Phidget sensors can be used to make something happen in Delta, often by acting as triggers for Delta Sequences.

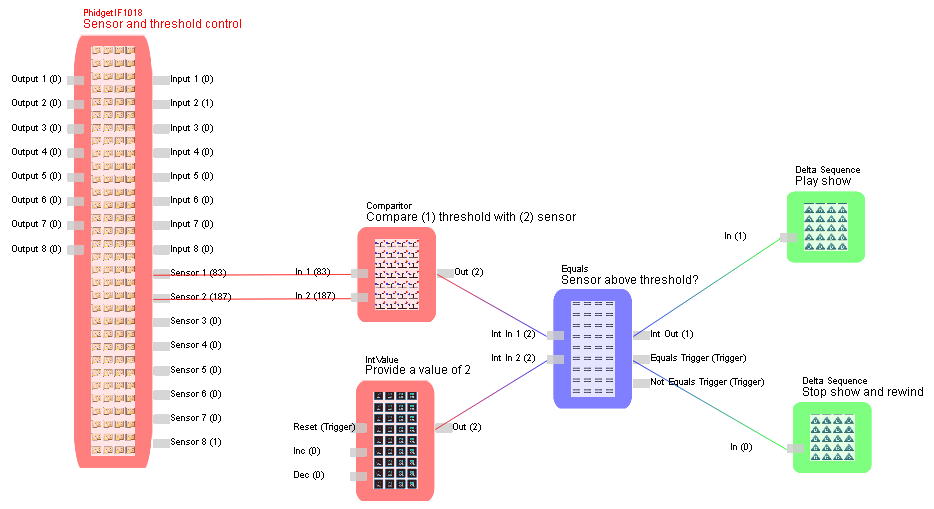

Sensor with threshold setting plays and stops a show

Let’s start with a Phidget Interface kit, to which we connect a variable manual control (e.g. a slider) and a sensor (motion, light or sound). The example interface is the 8/8/8, with 8 sensor inputs, 8 digital inputs and 8 digital outputs.

The manual control (Sensor 1 input to the interface) lets us set a threshold value. Comparing the light sensor (Sensor 2 input) value against this threshold creates a trigger.

This example can be seen below. Sensor 1 on the interface kit is the manual control and Sensor 2 is a light or sound sensor. The Delta Sequences (green, on the right) have already been written from DeltaGUI to gracefully start and stop a show timeline. In this way a variable sensor value is converted into a simple on/off trigger to run a sequence that begins a series of steps in Delta.

The Sensor 1 and Sensor 2 outputs are first compared to find which has the greater value, giving a result of either 1 or 2. This result is then tested for equality with the integer value 2. When the value of Sensor 2 falls below the threshold (Sensor 1), the result is 1, which does not equal 2, and a Delta Sequence is triggered to play the show. If Sensor 2 rises above the threshold value (as in the illustration), the result is 2 and an Equals trigger is sent to another Delta Sequence to stop the show and rewind it.

Note how applying node names helps to explain the logic (or to remind yourself of it).
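The same threshold logic can be sketched outside Delta to check the reasoning. This is only an illustration in Python, not how the nodes are implemented: the function names, the action labels and the sample readings are hypothetical, and treating equal values as "Sensor 2 is greater" is an assumption, since the text does not say how a tie is resolved.

```python
def index_of_greater(sensor1: int, sensor2: int) -> int:
    """Return 1 if Sensor 1 has the greater value, otherwise 2.

    Assumption: equal values count as 2 (tie handling is not stated in the text).
    """
    return 1 if sensor1 > sensor2 else 2


def show_action(threshold: int, reading: int) -> str:
    """Turn a variable sensor reading into a simple two-state trigger.

    threshold -- value of the manual control (Sensor 1)
    reading   -- value of the light or sound sensor (Sensor 2)
    """
    greater = index_of_greater(threshold, reading)
    # Equal to 2: Sensor 2 is above the threshold, so stop and rewind the show.
    # Not equal to 2: Sensor 2 is below the threshold, so play the show.
    return "stop_and_rewind" if greater == 2 else "play"


# Hypothetical readings with the slider (Sensor 1) held at 400
for reading in (250, 620):
    print(reading, show_action(400, reading))
```

In a live system the sensor values change continuously, so a sketch like this would normally act only when the result changes, rather than on every reading.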

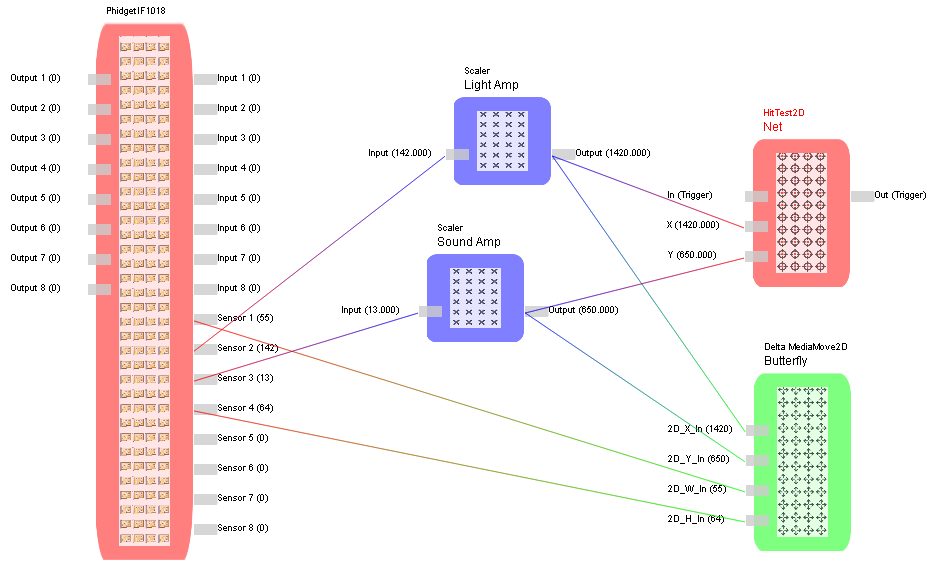

Use sensors to move media on-screen

In this example, a light sensor (2) and a sound sensor (3) on a Phidget kit (on the left) are used to move a piece of media around, using the green Delta MediaMove node: it might be catching a butterfly in a net on a display screen. The size of the media (the butterfly, for example) can be changed by two analogue controls (a single control could have been used instead, to keep the size proportional). The position co-ordinates, however, need to be amplified with Scaler nodes to give sufficient movement on screen. The target area (a HitTest2D node, here called Net) is fed the same positional outputs. Getting the butterfly in the net can then trigger some other event from the HitTest node, such as a sound, a sequence in Delta, or another process.
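The scale-then-hit-test idea above can be sketched as follows. This is a rough illustration in Python rather than a description of the Delta nodes themselves: the `scale` function, the `Rect` class, the gain values and the size and position of the net are all hypothetical, chosen only to show why raw sensor values need amplifying before they give useful screen positions.

```python
from dataclasses import dataclass


def scale(value: float, gain: float, offset: float = 0.0) -> float:
    """Amplify a raw sensor value into a screen co-ordinate (hypothetical scaler)."""
    return value * gain + offset


@dataclass
class Rect:
    """Axis-aligned target area, standing in for the 'Net' hit-test region."""
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        """Return True if the point (px, py) lies inside the rectangle."""
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def update(light: float, sound: float, net: Rect,
           gain_x: float = 2.0, gain_y: float = 1.0):
    """Map two sensor values to a position and test it against the net."""
    x = scale(light, gain_x)   # light sensor drives horizontal position
    y = scale(sound, gain_y)   # sound sensor drives vertical position
    return x, y, net.contains(x, y)


# Hypothetical net placed near the middle of the display
net = Rect(x=800, y=400, width=300, height=300)

x, y, caught = update(light=480, sound=540, net=net)
if caught:
    print("Butterfly caught: trigger a sound, a sequence, or another process")
```

The same position is used both to move the media and to test the target area, which matches the text: the hit test is fed the same positional outputs as the move.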